Data cleansing - Enhance your data quality

Most of time, there is inaccurate or wrong data due to system bugs or human-input error. In fact, these kind of unexpected error usually occurs in our database and occupied certain proportion of overall observations.

"Quality data, quality results." This statement is valid especially when we generate the summary report with statistics. Any missing or duplicated records could mislead the statistical results. Additionally, we sometimes find the abnormal value for some attribute (say zero transaction amount) in a record.

For predictive modelling in machine learning, outliers with extreme value are kicked out besides the improper data to prevent from affecting the model performance.

When studied in the university, I usually neglected the importance of cleansing the data. The reason seems to be my curiosity of studying the analytical method is far away from that of processing the data. Of course, I have learned the lesson from my own jobs and awarded the significance of data preparation. In real working condition, we should bear in mind that it is impossible to have the data sources with perfect quality. Having extracted the data sets from the database, you would find some unexpected and unreasonable values in the records. In order to attain a quality result of not only data analytic and model of data mining, it is necessary to modify and clean this dirty data.

But how to evaluate the data or information whether is reasonable and good enough to be analyzed. It is indisputable that the property of data in the attributes could be verified in line with industry knowledge and experience. Some industrial professionals are competent to determine the irrational values even if they do not know any statistical knowledge. That are the reasons why some articles indicate that data scientists possessing corresponding industry and business knowledge is a plus.

Data Quality

There are indicators of data quality listed below:

1. Accuracy

Accurate data could reflect the objects or events in actual environment. How come we obtain the precise results from inaccurate input? The data containing any inaccurate data results in inappropriate and misleading information.

2. Validity

Data can be validated by a wide variety of methods that checking the data type, formats, logic and lump sum etc. For instance, the non-integer value is found in the quantity of objects. This record is treated as invalid data in this attribute.

3. Consistency

After integrated data from different sources, the quality data should keep consistent to the data sources. Suppose you have graduated from high school or university are one of the members in a store. Inconsistent data was found that the status still shows "Student" in the membership profile.

4. Redundancy

Quality data consists of the least redundant records. The attributes with unique fields cannot tolerate any duplicated records which not only lead to bias but also affect the accuracy of the results. If two identical transaction records occurs in the invoice you issued, of course, your customer have to refuse to pay double and complain. The summary results are also misled when you analyzed the sales transaction,

5. Completeness

Complete data should consists of attributes meets user expectation. There are some questions with compulsory field in the online registration form to fill. Therefore, the receiver (user) is able to ensure the completeness of your information.

6. Timeliness

Timeliness of the data also depends on user expected. Obviously, the latest and real-time information is most preferred to receive by execution team.

Cleansing the data

Missing value

In reality, some customers are not willing to disclosure their personal information to protect the privacy. As a consequence, some value in some attributes was left blank in the customers profile. Furthermore, some data fields are not applicable to specific entity. For instant, the attribute: membership type should be blank for non-membership customer in the customer profile.

To handle these disappeared data, it is impossible for us to search and then verify the true value for each missing records. Imagining that the big data sources posses 1,000,000 row and missing data was found in only 1% of the total.If you were rich and young enough to get to the bottom for 10,000 records, just to do it.

Generally, the simplest and easiest way is to filter the whole record with missing value. This is so-called sight for the net. Nevertheless, that could result in bias of the sample, ignorance of useful information and even the Coefficient of determination (R square) are reduced if too much missing records existing in the sources.

Another common method is to estimate the interval variables by single or multiple imputation methods.

Single Imputation including 4 methods:

1. Imputing the unconditional mean (Replace by the mean of observed data)

2. Imputing from the unconditional distribution (Draws from distribution of observed data)

3. Imputing the conditional mean (Regression imputation by model)

4. Imputing from conditional distribution (Draws from distribution of missing data given the observed data)

Multiple Imputation including 3 steps:

1. Imputing from conditional distribution and replacing to the missing value

2. Repeating to construct several completed data-set with step 1

3. Combine the results of statistics and obtaining the conditional distribution of missing value

4. Imputing from this conditional distribution

For class variables, the values can be imputed by:

1. Mode, the most frequent occurrence

2. Draw from random percentiles of distribution

3. Imputed from decision tree

Duplicate record / Inconsistent data

De-duplicate the redundant records by analyzing the values of attribute. If the duplicated records are identical, drop and retain only one record. Otherwise, taking the average values for interval (numeric) variables or taking the mode for class variables could be adopted.

Noisy data

The data modified its original value is called Noisy data. To smoothen the noisy data, several method is suggested below:

1. Binning: Sort and divided into bins to smoothen the data by their mean, median, mode

2. Regression: Fitting the data in regression model and smooth the data

Outlier

Outlier describes the data objects with a huge different characteristics from most of other data. It depends on what purpose or objectives. If you hope to detect the extreme or extraordinary case and identify the reason of that, the outliers should not be retained in the data set. On the contrary, you have to click out them once you are building a predictive model especially which is sensitive to outlier. To detect the outlier, general practises are as follow:

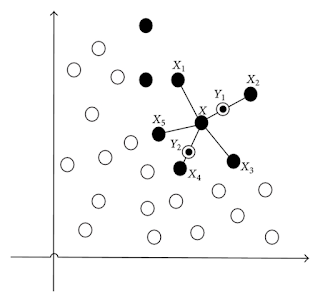

1. Clustering: the long distance between the center of clusters and the data points

2. Computer & Human Inspection: computer aids to detect the suspicious value and then verify by experience of human

Conclusion

To sum up, data cleansing is indispensable to ensure the quality of data. Despite being proficient of wide variety of algorithm, the models performances is affected by incomplete, inconsistent and extraordinary data at the certain extent. Additionally, you may find some models for prediction/classification, which also are applied to impute the missing or smoothen the extreme value. Therefore, models of machine learning are not limited to predict or classify the targets accurately. For more details of these models, you are recommended to refer to other articles with the topics of machine learning.

"Quality data, quality results." This statement is valid especially when we generate the summary report with statistics. Any missing or duplicated records could mislead the statistical results. Additionally, we sometimes find the abnormal value for some attribute (say zero transaction amount) in a record.

For predictive modelling in machine learning, outliers with extreme value are kicked out besides the improper data to prevent from affecting the model performance.

When studied in the university, I usually neglected the importance of cleansing the data. The reason seems to be my curiosity of studying the analytical method is far away from that of processing the data. Of course, I have learned the lesson from my own jobs and awarded the significance of data preparation. In real working condition, we should bear in mind that it is impossible to have the data sources with perfect quality. Having extracted the data sets from the database, you would find some unexpected and unreasonable values in the records. In order to attain a quality result of not only data analytic and model of data mining, it is necessary to modify and clean this dirty data.

But how to evaluate the data or information whether is reasonable and good enough to be analyzed. It is indisputable that the property of data in the attributes could be verified in line with industry knowledge and experience. Some industrial professionals are competent to determine the irrational values even if they do not know any statistical knowledge. That are the reasons why some articles indicate that data scientists possessing corresponding industry and business knowledge is a plus.

Data Quality

There are indicators of data quality listed below:

1. Accuracy

Accurate data could reflect the objects or events in actual environment. How come we obtain the precise results from inaccurate input? The data containing any inaccurate data results in inappropriate and misleading information.

2. Validity

Data can be validated by a wide variety of methods that checking the data type, formats, logic and lump sum etc. For instance, the non-integer value is found in the quantity of objects. This record is treated as invalid data in this attribute.

3. Consistency

After integrated data from different sources, the quality data should keep consistent to the data sources. Suppose you have graduated from high school or university are one of the members in a store. Inconsistent data was found that the status still shows "Student" in the membership profile.

4. Redundancy

Quality data consists of the least redundant records. The attributes with unique fields cannot tolerate any duplicated records which not only lead to bias but also affect the accuracy of the results. If two identical transaction records occurs in the invoice you issued, of course, your customer have to refuse to pay double and complain. The summary results are also misled when you analyzed the sales transaction,

5. Completeness

Complete data should consists of attributes meets user expectation. There are some questions with compulsory field in the online registration form to fill. Therefore, the receiver (user) is able to ensure the completeness of your information.

6. Timeliness

Timeliness of the data also depends on user expected. Obviously, the latest and real-time information is most preferred to receive by execution team.

Cleansing the data

Missing value

In reality, some customers are not willing to disclosure their personal information to protect the privacy. As a consequence, some value in some attributes was left blank in the customers profile. Furthermore, some data fields are not applicable to specific entity. For instant, the attribute: membership type should be blank for non-membership customer in the customer profile.

To handle these disappeared data, it is impossible for us to search and then verify the true value for each missing records. Imagining that the big data sources posses 1,000,000 row and missing data was found in only 1% of the total.

Generally, the simplest and easiest way is to filter the whole record with missing value. This is so-called sight for the net. Nevertheless, that could result in bias of the sample, ignorance of useful information and even the Coefficient of determination (R square) are reduced if too much missing records existing in the sources.

Another common method is to estimate the interval variables by single or multiple imputation methods.

Single Imputation including 4 methods:

1. Imputing the unconditional mean (Replace by the mean of observed data)

2. Imputing from the unconditional distribution (Draws from distribution of observed data)

3. Imputing the conditional mean (Regression imputation by model)

4. Imputing from conditional distribution (Draws from distribution of missing data given the observed data)

Multiple Imputation including 3 steps:

1. Imputing from conditional distribution and replacing to the missing value

2. Repeating to construct several completed data-set with step 1

3. Combine the results of statistics and obtaining the conditional distribution of missing value

4. Imputing from this conditional distribution

For class variables, the values can be imputed by:

1. Mode, the most frequent occurrence

2. Draw from random percentiles of distribution

3. Imputed from decision tree

Duplicate record / Inconsistent data

De-duplicate the redundant records by analyzing the values of attribute. If the duplicated records are identical, drop and retain only one record. Otherwise, taking the average values for interval (numeric) variables or taking the mode for class variables could be adopted.

Noisy data

The data modified its original value is called Noisy data. To smoothen the noisy data, several method is suggested below:

1. Binning: Sort and divided into bins to smoothen the data by their mean, median, mode

2. Regression: Fitting the data in regression model and smooth the data

Outlier

Outlier describes the data objects with a huge different characteristics from most of other data. It depends on what purpose or objectives. If you hope to detect the extreme or extraordinary case and identify the reason of that, the outliers should not be retained in the data set. On the contrary, you have to click out them once you are building a predictive model especially which is sensitive to outlier. To detect the outlier, general practises are as follow:

1. Clustering: the long distance between the center of clusters and the data points

2. Computer & Human Inspection: computer aids to detect the suspicious value and then verify by experience of human

Conclusion

To sum up, data cleansing is indispensable to ensure the quality of data. Despite being proficient of wide variety of algorithm, the models performances is affected by incomplete, inconsistent and extraordinary data at the certain extent. Additionally, you may find some models for prediction/classification, which also are applied to impute the missing or smoothen the extreme value. Therefore, models of machine learning are not limited to predict or classify the targets accurately. For more details of these models, you are recommended to refer to other articles with the topics of machine learning.

Comments

Post a Comment