Data Mining - Overview of the Data Mining Process

As mentioned in the previous article, data mining, also known as Knowledge Discovery in Databases (KDD), is the process of extracting useful information from unstructured data. Data mining models include, but are not limited to, machine learning algorithms.

How does it work? To understand the big picture, the data mining process is visualized below:

Data Integration

Data dispersed across multiple databases is integrated into, say, a data warehouse. The purpose is to prepare the raw data for further processing. In normal practice, the result is in table format, with attributes as columns and records as rows.
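
As a rough illustration of integration, the sketch below joins two small sources into a single table. The `customers` and `orders` sources, the `customer_id` key, and all values are hypothetical and only serve to show the idea of one row per record with attributes drawn from several places.

```python
# Hypothetical records from two separate sources, both keyed by customer_id.
customers = [
    {"customer_id": 1, "age": 34},
    {"customer_id": 2, "age": 51},
]
orders = [
    {"customer_id": 1, "amount": 120.0},
    {"customer_id": 1, "amount": 80.0},
    {"customer_id": 2, "amount": 45.5},
]


def integrate(customers, orders):
    """Join the two sources into one table: one record (row) per customer."""
    totals = {}
    for order in orders:
        key = order["customer_id"]
        totals[key] = totals.get(key, 0.0) + order["amount"]
    # Each output row carries attributes (columns) drawn from both sources.
    return [
        {**c, "total_amount": totals.get(c["customer_id"], 0.0)}
        for c in customers
    ]


table = integrate(customers, orders)
```

In practice this step is usually done with database joins or an ETL tool rather than by hand; the point here is only the shape of the result.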

Sampling

Most data mining models involve complex algorithms and intensive computation. If the data is too large to analyze in full, sampling is indispensable for reducing computation time. Several methods, such as simple random sampling, systematic sampling, and cluster sampling, can be used to reduce the amount of data.
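
Two of the sampling methods mentioned above can be sketched in a few lines. This is a minimal example using only the Python standard library; the function names and the list of 100 integers standing in for table rows are assumptions made for illustration.

```python
import random


def simple_random_sample(records, n, seed=0):
    """Draw n records without replacement, each equally likely."""
    rng = random.Random(seed)
    return rng.sample(records, n)


def systematic_sample(records, step, start=0):
    """Take every step-th record, beginning at index start."""
    return records[start::step]


records = list(range(100))  # stand-in for 100 rows of a table
random_subset = simple_random_sample(records, 10)
systematic_subset = systematic_sample(records, step=10, start=3)
```

Both calls reduce 100 rows to 10. The fixed `seed` only makes the random draw repeatable.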

Data Exploring

To observe the structure of the data and to detect whether missing or noisy data exist, we first explore the data. The data can be described and summarized with statistics; the most common method is taking the average of the values of an attribute. People are undoubtedly more sensitive to graphics and images than to figures, so visualizing the data in charts and graphs also helps to discover patterns or distributions.
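
The statistical summary described above might look like the following sketch. The `ages` attribute and its values are made up, and `None` is assumed to mark a missing entry.

```python
import statistics

# Hypothetical values of one attribute; None marks a missing entry.
ages = [23, 35, None, 41, 29, None, 35, 90]


def summarize(values):
    """Return basic descriptive statistics, ignoring missing entries."""
    present = [v for v in values if v is not None]
    return {
        "count": len(present),
        "missing": len(values) - len(present),
        "mean": statistics.mean(present),
        "median": statistics.median(present),
        "min": min(present),
        "max": max(present),
    }


summary = summarize(ages)
```

A mean well above the median, as in this data, is one hint that an unusually large value may be present and worth a closer look.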

Data Cleansing

In reality, there is no "perfect data". If missing data or outliers are found, they are handled with a series of methods introduced in the articles about data quality. The aim is to ensure data quality and thus the quality of the data mining results.
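
As one possible illustration, the sketch below fills missing values with the median and flags outliers with the common 1.5 × IQR rule. These are widely used techniques chosen here as assumptions; they are not necessarily the ones covered in the data quality articles, and the `ages` values are invented.

```python
import statistics


def fill_missing(values):
    """Replace missing entries (None) with the median of the observed values."""
    present = [v for v in values if v is not None]
    median = statistics.median(present)
    return [median if v is None else v for v in values]


def find_outliers(values, k=1.5):
    """Return values lying more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical attribute values; None marks a missing entry.
ages = [23, 35, None, 41, 29, None, 35, 90]
filled = fill_missing(ages)
outliers = find_outliers(filled)
```

Whether a flagged value is removed, corrected, or kept depends on the data and the purpose of the analysis.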

Data Partition

Partitioning is not a must in model building, since it mainly suits supervised learning models. The data source is divided by rows (records) into training, validation, and test datasets. The training data, whose target values are known, is used to train the models. The validation data is then fed into the models built from the training data so that their performance (e.g. accuracy for classification/prediction) can be compared and the best model selected. Finally, the best model is applied to the test data, and the results are used to evaluate it.
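
A minimal sketch of such a partition is shown below. The 60/20/20 proportions, the function name, and the 50 integers standing in for rows are assumptions chosen for illustration.

```python
import random


def partition(records, train=0.6, validation=0.2, seed=0):
    """Shuffle the rows and split them into training, validation, and test sets."""
    shuffled = records[:]  # copy so the original order is left untouched
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * validation)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],  # whatever remains becomes the test set
    )


rows = list(range(50))  # stand-in for 50 records
train_set, validation_set, test_set = partition(rows)
```

Shuffling before splitting avoids carrying any ordering in the source table (for example, by date) into the three sets.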

Model Building

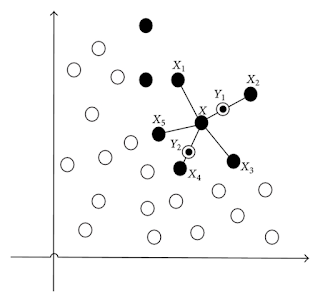

There are a great many models built for different purposes, such as classification, prediction, clustering, and association analysis. Models for classification can be either supervised or unsupervised learning models. The former include, for example, Logistic Regression, Artificial Neural Network (ANN), K-Nearest Neighbors (KNN), and Support Vector Machine (SVM), while the latter include Clustering, Self-Organizing Map (SOM), etc. Popular models for predicting interval variables include Linear Regression, Decision Tree, ANN, SVM, etc.
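
To make one of the supervised models concrete, here is a bare-bones K-Nearest Neighbors classifier written with the standard library only. The training points and class labels are hypothetical, and a real project would more likely use an existing library implementation.

```python
import math
from collections import Counter


def knn_predict(train_rows, query, k=3):
    """Predict the class of query by majority vote among its k nearest rows.

    Each training row is (features, label); features are numeric tuples.
    """
    by_distance = sorted(train_rows, key=lambda row: math.dist(row[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


# Hypothetical two-attribute training data with known target values.
train_rows = [
    ((1.0, 1.0), "A"), ((1.2, 0.8), "A"), ((0.9, 1.1), "A"),
    ((4.0, 4.2), "B"), ((4.1, 3.9), "B"), ((3.8, 4.0), "B"),
]
prediction = knn_predict(train_rows, (1.1, 0.9))
```

KNN has no separate training step: the "model" is simply the stored training data plus the choice of `k`.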

Model Assessment

Of course, a model's performance is rarely optimal after building it just once. Based on the properties of the algorithm and on performance indicators (such as the ROC curve), some data is removed and the algorithm's parameters are adjusted so that the model performs better. The process therefore steps back to the data cleansing stage and repeats until the model approaches its best performance.
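
The parameter-adjustment loop can be sketched as follows. For simplicity this example uses accuracy rather than the ROC curve as the indicator, and the one-parameter `threshold_model`, the candidate thresholds, and the validation data are all hypothetical.

```python
def accuracy(actual, predicted):
    """Fraction of records whose predicted class matches the actual class."""
    correct = sum(a == p for a, p in zip(actual, predicted))
    return correct / len(actual)


def threshold_model(threshold):
    """Hypothetical one-parameter classifier: class 1 if score > threshold."""
    return lambda score: 1 if score > threshold else 0


# Hypothetical validation data: (score, actual class).
validation = [
    (0.1, 0), (0.3, 0), (0.4, 0), (0.45, 1),
    (0.6, 1), (0.7, 1), (0.85, 1), (0.55, 0),
]
actual = [label for _, label in validation]

# Try several parameter values and record the accuracy of each.
results = {}
for threshold in (0.2, 0.5, 0.8):
    model = threshold_model(threshold)
    predicted = [model(score) for score, _ in validation]
    results[threshold] = accuracy(actual, predicted)

best_threshold = max(results, key=results.get)
```

The same pattern applies to real models: vary a parameter, measure performance on the validation data, and keep the setting that scores best.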

Deployment (Implementation)

The best model is deployed to generate the data mining results. For instance, new data would be fed into a classification model to get the estimated class of the target variable.

Interpretation

Once the data mining results are obtained, they can be interpreted as new knowledge or information.

If you are just beginning to learn data mining, this may feel complicated and vague. Don't worry! More details on each step of the process will be discussed in other articles.
